AI could unleash biological weapons, make humans extinct: scientists


AI in the wrong hands could make humans extinct

(Web Desk) – Top scientists have warned that AI is not 'a toy' and could be catastrophic for humankind.

In a report calling for stricter regulations on the tech, experts say artificial intelligence (AI) - in the wrong hands - could be used to produce biological weapons and someday make humans extinct.

Scientists have long been calling on world leaders to take stronger action against the risks AI poses.

But in a new report published today, experts warn that progress has been insufficient since the first AI Safety Summit at Bletchley Park six months ago.

Governments have a responsibility to stop AI from being developed with worrying capabilities, such as those that could enable biological or nuclear warfare, the report argues.

"The world agreed during the last AI summit that we needed action, but now it is time to go from vague proposals to concrete commitments," said Professor Philip Torr of the Department of Engineering Science at the University of Oxford, a co-author of the paper.

AI is outperforming humans in many eyebrow-raising areas, such as hacking, cybercrime and social manipulation, yet regulations to prevent misuse and recklessness are lacking, the report adds.

Experts believe AI may soon pose threats humans haven't even thought about - or planned for.

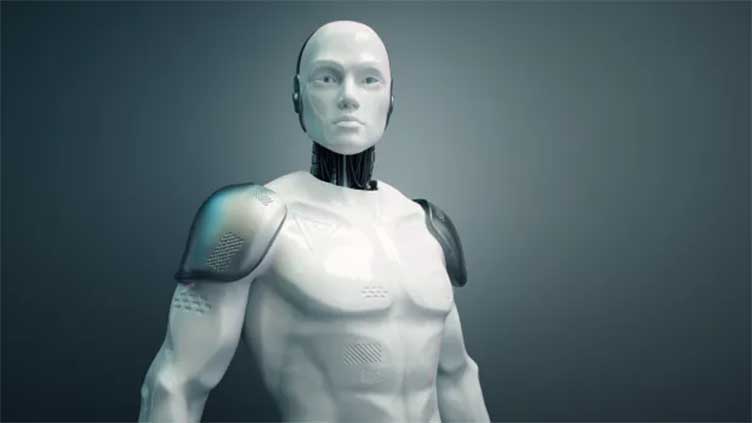

AI chatbots and future humanoid robots could use manipulation tactics to gain human trust and lure decision-makers into actions that advance their corrupt goals.

To avoid human intervention, AI systems could copy their own algorithms across global server networks, the report cautions.

Stuart Russell OBE, Professor of Computer Science at the University of California at Berkeley, said: “This is a consensus paper by leading experts, and it calls for strict regulation by governments, not voluntary codes of conduct written by industry.

"It’s time to get serious about advanced AI systems. These are not toys.

"Increasing their capabilities before we understand how to make them safe is utterly reckless."

Responding to Big Tech companies that argue safety regulations will stifle innovation, Russell added: "That’s ridiculous. There are more regulations on sandwich shops than there are on AI companies."

A total of 25 of the world's top academic AI experts signed off on the report, including Geoffrey Hinton, Andrew Yao, Dawn Song, and the late Daniel Kahneman, who died last month.